Purpose-Built AI Tools for Research vs General-Purpose LLMs: What’s the Difference?

By Insight Platforms


When LLMs are so powerful, why do we need purpose-built AI tools for research and insights? There are literally hundreds of them.

AI Tools for Research & Insights: Market Landscape

The answer to the question is nuanced. The devil is often in the details.

So, we asked a number of experts across different research and insights capabilities to share their views.

We ended up with quite a long article.

In the first part, we discuss some of the use cases and advantages of General-Purpose LLMs for five different market research applications of AI.

Next, we explore what we mean by purpose-built AI tools. These can be grouped into three categories, each with its own benefits and trade-offs.

Finally, we revisit each of the five use cases to explore the specific advantages that AI tools provide.

Many thanks to all our contributing specialists. You can find out more about the companies behind these insights in the article below.


Using General-Purpose LLMs for Market Research

General-purpose LLMs are amazing. OpenAI’s ChatGPT, Anthropic’s Claude, Google’s Gemini and Microsoft’s Copilot all have powerful uses for researchers and insight professionals. They can do things we couldn’t imagine delegating to computers only a couple of years ago.

Let’s look at some example use cases.

1. Qualitative Data Analysis

LLMs can transform the traditionally labour-intensive process of qualitative transcript analysis into an efficient, scalable workflow.

Theming & clustering: qualitative data from interviews or focus groups can be automatically clustered by applying thematic or content analysis, revealing patterns and trends without requiring as much manual coding.

Summarisation: LLMs can quickly build on these themes and clusters to generate reports and summaries from all this unstructured input.

Evidence generation: AI can find relevant quotes from text or video transcripts and even create showreels to support the insights generated in a summary report.

2. Quantitative Analysis of Survey Data

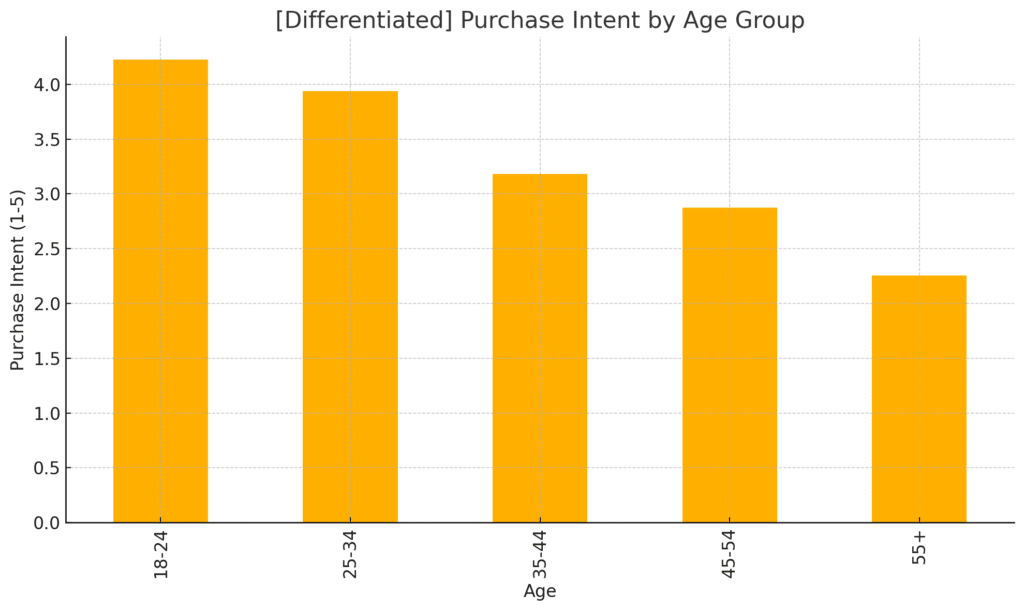

If you have a CSV file with survey results you can now upload it into ChatGPT or Claude and conduct a wide range of analysis and reporting.

Generate descriptive statistics and cross-tabulations: run frequency counts, percentages, mean / median / mode calculations.

Generate data visualisations: produce simple or complex graphics depending on the analysis requirements.

Conduct more advanced analysis: Examples include segmentations (“Segment the survey data into distinct groups based on customer attitudes”) or predictive analytics (“Based on this data, can you forecast the likely values for the next 12 months?”).
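As a rough illustration of what the descriptive statistics and cross-tabulations above involve, here is a minimal sketch using only the Python standard library. The CSV content, column names and values are invented for the example; they are assumptions, not data from any real survey.

```python
import csv
import io
from collections import Counter
from statistics import mean, median, mode

# Hypothetical survey export; in practice this would be read from a file.
SURVEY_CSV = """respondent_id,region,satisfaction
1,North,4
2,North,5
3,South,2
4,South,4
5,North,4
6,South,3
"""

rows = list(csv.DictReader(io.StringIO(SURVEY_CSV)))
scores = [int(r["satisfaction"]) for r in rows]

# Frequency counts and percentages for one question.
freq = Counter(scores)
pct = {score: 100 * n / len(scores) for score, n in freq.items()}

# Central tendency.
summary = {"mean": mean(scores), "median": median(scores), "mode": mode(scores)}

# Cross-tabulation: region by satisfaction score.
crosstab = Counter((r["region"], int(r["satisfaction"])) for r in rows)

print(sorted(freq.items()))
print(summary)
print(crosstab[("North", 4)])
```

Running the same calculation independently like this is one way to spot-check figures that a chat interface reports.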

3. Coding Open-Ended Survey Responses

Open-ended survey responses often contain rich but unstructured data that can be time-consuming or costly to analyse manually.

Generic LLMs can simplify this process.

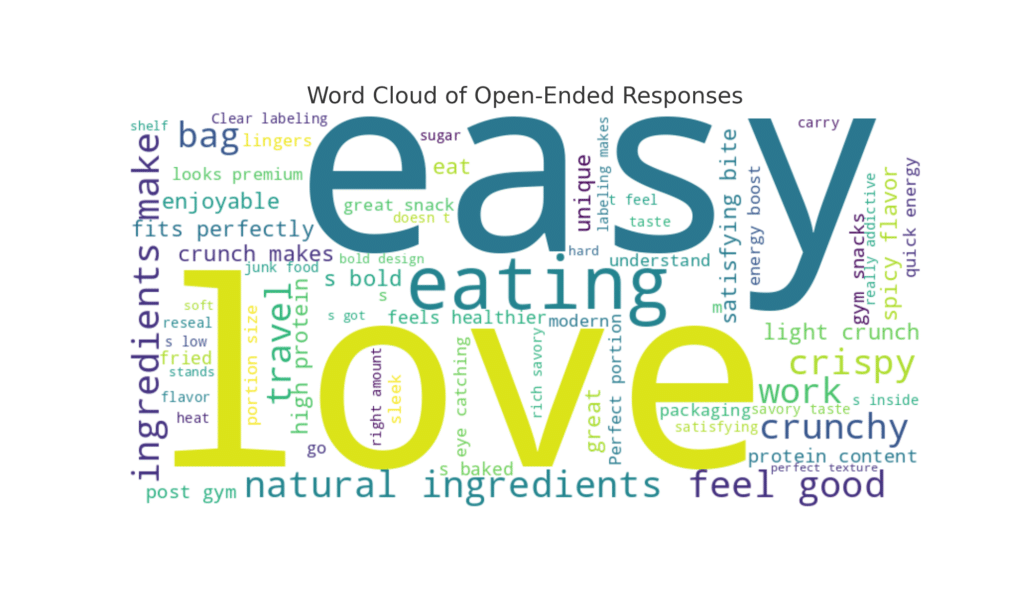

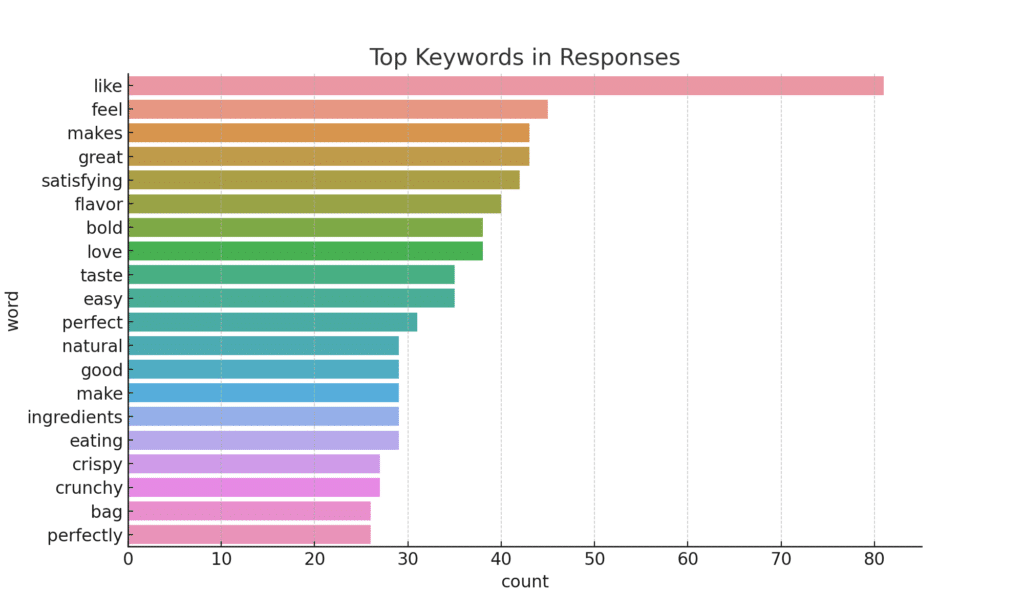

Thematic analysis / topic clustering: identify recurring themes, group similar responses, label and summarise key topics.

Sentiment analysis: score feedback based on how positive, negative, or neutral it is; break down sentiment by subgroup.

Keyword frequency counts & word clouds: extract, rank and visualise the most common keywords and phrases.
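The keyword frequency step above can be sketched in a few lines of Python. The responses and the stop-word list below are invented for illustration, and a real project would likely use a fuller stop-word list and some form of stemming.

```python
import re
from collections import Counter

# Hypothetical open-ended responses.
responses = [
    "Delivery was slow and the packaging was damaged",
    "Great price, fast delivery",
    "The price is too high for slow delivery",
]

# A tiny illustrative stop-word list; real projects would use a fuller one.
STOP_WORDS = {"the", "and", "was", "is", "too", "for", "a"}

def keyword_counts(texts):
    """Count lower-cased words across all texts, skipping stop words."""
    counts = Counter()
    for text in texts:
        words = re.findall(r"[a-z']+", text.lower())
        counts.update(w for w in words if w not in STOP_WORDS)
    return counts

counts = keyword_counts(responses)
print(counts.most_common(3))
```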

4. Generating Synthetic Personas

Synthetic personas can be generated using LLMs by combining their existing knowledge and training data with additional context that you upload – for example research reports, interview transcripts or internal documents.

The LLM can then adopt a specific persona in order to answer questions and interact using its knowledge base.

Use cases for this might include:

Testing hypotheses: to help develop new product ideas or marketing concepts (“What do you think of this idea?”, “How might you use this?”).

Simulating customer interviews: using synthetic personas to pilot survey designs or qualitative discussion guide drafts in order to refine them.

Journey mapping: to find areas of friction or opportunity in the customer experience (“Tell me about the last time you made a purchase like this”).

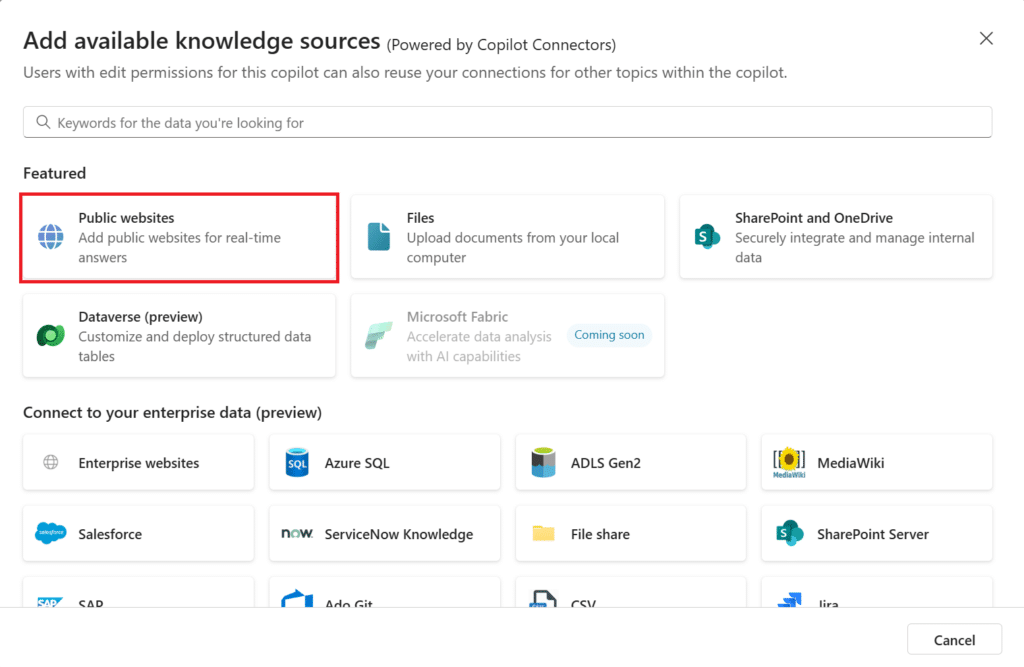

5. Creating Insights Knowledge Repositories

Tools like Microsoft’s Copilot and Google’s Gemini can integrate with enterprise knowledge sources in OneDrive, Google Drive, CRM systems, or other data sources.

This data can then be queried by users who may or may not be research / insights experts. Advantages include:

Conversational search: natural language interfaces make it much easier for users to query data.

Combination and integration: access to many different types of data and documents, both structured and unstructured.

Synthesis and summarisation: LLM tools are very effective at generating summaries based on a variety of inputs.

Given the power and ease of use of general-purpose LLMs across these five categories, what’s the point of purpose-built AI tools for research and insights?

What are Purpose-Built AI Tools for Research?

The following thoughts are from Alok Jain of Reveal

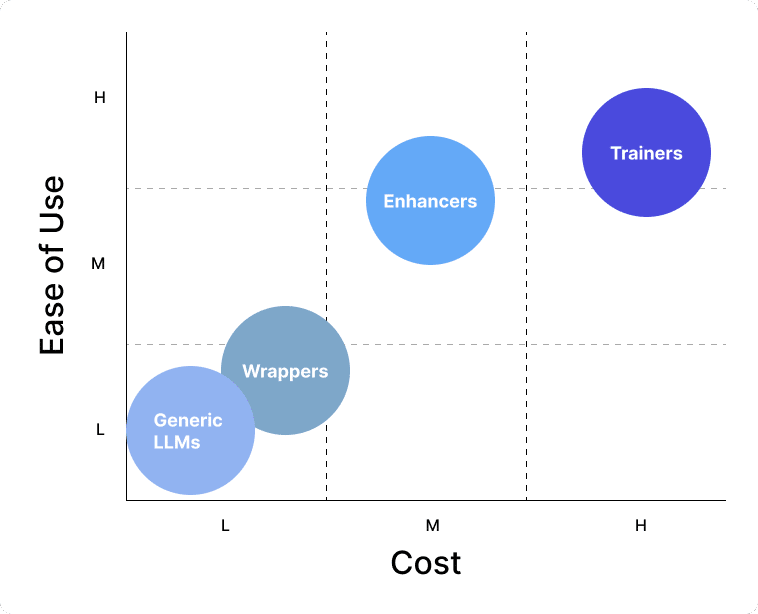

Purpose-built AI tools for research fall into three broad categories: Wrappers, Enhancers, and Trainers. Each offers distinct advantages and limitations from the standpoint of the researcher using them.

1. Wrappers: Simple but Limited

Wrappers function as saved prompts that call an LLM to analyse qualitative data. Researchers input raw data into the tool, which then uses predefined prompts to interact with the LLM. The tool outputs insights based on these prompts, which researchers can use directly or refine further.
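To make the idea of a saved prompt concrete, here is a minimal sketch of the Wrapper pattern. The prompt wording, the `make_wrapper` helper and the `fake_llm` stand-in are all hypothetical; a real tool would call an actual model API at that point.

```python
from typing import Callable

# A saved prompt template; the wording is purely illustrative.
THEME_PROMPT = (
    "You are a qualitative research analyst. "
    "List the main themes in the following transcript:\n\n{transcript}"
)

def make_wrapper(prompt_template: str, call_llm: Callable[[str], str]):
    """Return a function that fills the saved prompt and forwards it to the LLM."""
    def run(transcript: str) -> str:
        return call_llm(prompt_template.format(transcript=transcript))
    return run

def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call so the sketch runs offline."""
    return f"[model output for a {len(prompt)}-character prompt]"

find_themes = make_wrapper(THEME_PROMPT, fake_llm)
print(find_themes("Moderator: What did you think of the app?"))
```

The prompt is fixed at the point the wrapper is created, which is where both the ease of use and the limited adaptability described in this section come from.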

Strengths:

Ease of use: Wrappers provide a straightforward way to leverage LLM capabilities without needing expertise in prompt engineering. They allow researchers to quickly analyse qualitative data without much setup.

Speed: these tools eliminate the trial-and-error process of crafting effective prompts, allowing researchers to get insights faster.

Cost-effective: since Wrappers rely on existing LLMs without major modifications, they are usually affordable and accessible for a wide range of research needs.

Weaknesses:

Limited adaptability: Wrappers operate on static prompts, which may not fully capture the complexity of certain research tasks or adapt to evolving insights.

Lower quality or surface-level output: because Wrappers rely on static prompts without validation or refinement, the insights they generate may be shallow, generic, or lacking in-depth analysis, requiring researchers to supplement or refine findings manually.

Lack of good workflows: Wrappers often lack structured workflows designed to make a researcher’s job easier. This means that researchers may still need to invest significant time in organising data, interpreting results, and refining outputs, putting more work on their side.

2. Enhancers: Balancing Flexibility and Control

Enhancers extend the functionality of LLMs by preparing and structuring data for analysis. They provide workflows and guidelines that ensure the AI outputs follow a specific process.

Additionally, Enhancers validate the AI-generated results, refining the insights to meet the researcher’s objectives. Researchers interact with the AI through these structured systems to ensure consistent, quality results.

Most AI agents today fall into this category.
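The prepare, analyse and validate sequence described for Enhancers might look something like the sketch below. Every function name here is hypothetical, the validation rule (checking themes against an agreed codebook) is just one possible check, and `fake_llm` stands in for a real model call.

```python
from typing import Callable, List

def prepare(raw_responses: List[str]) -> List[str]:
    """Data preparation: trim whitespace and drop empty or duplicate entries."""
    seen, cleaned = set(), []
    for text in raw_responses:
        text = " ".join(text.split())
        if text and text.lower() not in seen:
            seen.add(text.lower())
            cleaned.append(text)
    return cleaned

def validate(themes: List[str], allowed: set) -> List[str]:
    """Validation: keep only themes that exist in the agreed codebook."""
    return [t for t in themes if t in allowed]

def enhanced_analysis(raw, call_llm: Callable[[List[str]], List[str]], allowed: set):
    """Run the three stages in a fixed order: prepare, analyse, validate."""
    cleaned = prepare(raw)
    proposed = call_llm(cleaned)
    return validate(proposed, allowed)

def fake_llm(texts: List[str]) -> List[str]:
    """Stand-in model that returns one valid and one off-codebook theme."""
    return ["price", "weather"]

result = enhanced_analysis(["  Too expensive ", "too expensive", ""], fake_llm, {"price", "service"})
print(result)
```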

Strengths:

Ease of use: Enhancers are designed to align with researchers’ workflows, making AI more intuitive and reducing the effort needed to integrate it into existing research processes.

More reliable AI guidance: Enhancers provide structured workflows that ensure research questions are addressed systematically, reducing the risk of irrelevant or incomplete responses.

Quality control: by validating and refining AI-generated outputs, Enhancers help researchers maintain accuracy and reliability in their findings.

Better data preparation: these tools assist in organising and structuring raw data before analysis, reducing the burden on researchers.

Quality of insights: Enhancers deliver substantially higher quality insights compared to Wrappers or generic LLMs due to their comprehensive approach to the research process including data preparation, multi-step analysis and quality checks.

Weaknesses:

Learning curve: while Enhancers make AI more useful, researchers may need to familiarise themselves with how to use them effectively.

Dependence on LLM accuracy: the quality of insights still depends on the foundational LLM’s capabilities, which may not always align with specific research needs.

Higher cost than Wrappers: the additional features and validation processes come at a higher price, which may not be feasible for all research teams. Some Enhancer tools attempt to reduce costs by limiting the amount of data sent to the LLM, but this can sometimes compromise the quality and depth of analysis.

3. Trainers: Maximum Customisation but High Investment

Trainers do not rely solely on the out-of-the-box knowledge of an LLM but instead train AI models on proprietary datasets, allowing for highly customised insights tailored to specific research needs.

Strengths:

Highly tailored insights: Trainers allow businesses to build AI models trained on proprietary datasets, ensuring domain-specific accuracy and relevance.

Competitive advantage: custom-trained models provide deeper, more nuanced insights that generic LLMs cannot replicate, making them ideal for organisations needing highly specialised research capabilities.

Control over AI behaviour: researchers can fine-tune AI to align with their methodologies, reducing the risk of generic or misinterpreted results.

Weaknesses:

Resource intensive: training AI models requires a significant investment in data collection, processing, and technical expertise.

Longer implementation time: unlike Wrappers and Enhancers, which can be used immediately, Trainers require months of training and refinement.

Higher cost: the financial investment needed to develop and maintain a custom AI model may be prohibitive for smaller research teams or short-term projects.

Data privacy concerns: since Trainers require extensive proprietary data to function effectively, organisations must ensure robust data security and compliance measures to protect sensitive information from breaches or misuse.

Comparing Purpose-Built AI Tools for Research with the Generic LLMs

Generic LLMs such as GPT-4o and Claude offer impressive capabilities but present distinct challenges for market researchers when used in their standard interface forms.

Limitations of Generic LLM Interfaces

These models typically rely on simple chat interfaces that require researchers to manually craft and refine prompts for each analysis task. This approach creates several inefficiencies when compared with purpose-built AI tools for research.

Repetitive prompt engineering: researchers must recreate and re-enter effective prompts for similar analyses across different projects, leading to inconsistent methodologies and wasted time.

Manual data preparation: raw market research data must be cleaned, formatted, and contextualised by researchers before being input into the LLM, often requiring significant preprocessing work.

Lack of structured workflows: without built-in research frameworks, researchers must design and implement their own methodological approaches, potentially missing important analytical steps.

Absence of validation mechanisms: the responsibility for verifying the accuracy and reliability of AI-generated insights falls entirely on the researcher, increasing the risk of undetected errors or biases.

Limited data handling: standard interfaces often restrict the amount of data that can be analysed at once, forcing researchers to segment larger datasets and manually synthesise findings.

Evaluating Purpose-Built AI Tools for Research: The Cost vs. Effort Trade-off

We can narrow down the choice parameters to two critical factors that most market research teams must balance: the financial cost of the tool and the researcher effort needed to obtain high-quality output. This framework provides a practical lens through which to evaluate different AI options.

The visualisation below maps the four categories of AI tools across these two dimensions:

- Cost: the financial investment required (from Low to High).

- Researcher effort: the time and expertise needed from the research team to produce quality insights (from High to Low).

As illustrated, there’s a clear correlation between higher tool costs and reduced researcher effort requirements. Organisations must determine where on this spectrum they can optimise their resources based on their specific research needs, budget constraints, and available expertise.

The Hidden Costs

While generic LLMs appear cost-effective at first glance, the significant researcher time and expertise required to use them effectively represents a substantial hidden cost. Organisations must weigh the lower subscription or API fees against the increased labour costs and potential quality inconsistencies.

For occasional or exploratory market research needs, generic LLMs may be sufficient. However, for organisations conducting regular, in-depth market analyses, the cumulative inefficiencies of using generic interfaces can quickly outweigh their initial cost advantage compared to specialised tools.

This mapping reveals how purpose-built AI tools for research create value by reducing the burden on researchers despite their higher price points. When evaluating the total cost of ownership, organisations should consider not only the direct tool costs but also the implicit costs of researcher time and expertise required to achieve the desired quality of insights.
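The total cost of ownership argument reduces to simple arithmetic: direct tool cost plus researcher hours multiplied by an hourly rate. The sketch below uses invented figures purely to show the shape of the comparison; none of the numbers come from the article or from any vendor.

```python
def total_cost(tool_cost: float, researcher_hours: float, hourly_rate: float) -> float:
    """Direct tool cost plus the implicit cost of researcher time."""
    return tool_cost + researcher_hours * hourly_rate

# All figures below are invented for illustration only.
HOURLY_RATE = 80.0
generic = total_cost(tool_cost=20.0, researcher_hours=12, hourly_rate=HOURLY_RATE)
purpose_built = total_cost(tool_cost=400.0, researcher_hours=3, hourly_rate=HOURLY_RATE)

print(generic, purpose_built)
```

With different assumptions about hours or rates, the comparison can tip the other way, which is why occasional users may still be better served by a generic LLM.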

Read more about Alok & Reveal

Alok Jain

Reveal

Comparing Purpose-Built AI Tools for Research with Generic LLMs: Five Use Cases

1. Qualitative Data Analysis

The following thoughts are from Annie McDannald of Quillit by Civicom

The primary benefit to working with a purpose-built tool for research is the value of the extensive, advanced backend prompt engineering.

From the time ChatGPT initially hit the market, a key challenge users faced was understanding the nuances in the art of prompting the AI to deliver comprehensive and valuable responses. Many researchers don’t have the time or interest to devote to becoming skilled prompt engineers.

As we’ve developed Quillit, we’ve gained extensive skill and experience optimising the advanced prompt engineering on the backend and are constantly refining and improving these complex prompts based on actual client feedback on response accuracy.

Security is a secondary point of contention.

A purpose-built AI tool for research has an opportunity to refine security measures, features, and protocols to successfully adhere to the data privacy and security requirements that are specific to research and research participants.

A base LLM looks at security through a broader lens, whereas research in specific industries (e.g. pharma, healthcare, tech) requires very stringent and specific security measures to be in place. Researchers are likely to get more reliable security compliance when utilising a restech tool.

Read more about Annie and Quillit AI by Civicom

Annie McDannald

Quillit ai

The following thoughts are from Jack Bowen of CoLoop

Why choose a specialised tool?

Performance: specialised tools are designed to do one thing well. That means providers can layer in tests, validations, and safeguards, giving consistent, trustworthy outputs. General-purpose LLMs often rely on open-ended, step-by-step reasoning (e.g. “agentic” processes). These increase the chance of things going off-track. Every extra step is another coin toss.

I spoke recently to a policy research firm that runs 21,000 interviews per year. They explained that a 99% success rate with a tool still means 210 interviews go wrong, with potentially serious consequences if the firm gives erroneous advice as a result. Non-standardised processes are a huge risk, and there are no standard ways of using generic chatbots.
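The arithmetic behind the 210 figure, and behind the point that every extra step adds risk, can be sketched as follows. The assumption that steps fail independently, and the choice of ten steps, are illustrative simplifications rather than anything stated by the contributor.

```python
def expected_failures(n_tasks: int, success_rate: float) -> float:
    """Expected number of failed tasks at a given per-task success rate."""
    return n_tasks * (1 - success_rate)

def chain_success(step_success: float, n_steps: int) -> float:
    """Probability that every step in a chain succeeds, assuming independence."""
    return step_success ** n_steps

print(round(expected_failures(21_000, 0.99)))
print(round(chain_success(0.99, 10), 3))
```

Under these assumptions, a ten-step process in which each step is 99% reliable completes without error only about 90% of the time.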

Adoption: organisations want solutions that fit right into existing workflows. Generic LLMs are powerful, but they often require new mental models, training, and confidence. Tools with specific use cases, guardrails, and interfaces that mirror existing ways of working get adopted faster — and start creating competitive advantage sooner for those that adopt them.

Future proofing: specialised tools will get better over time and more optimised for the task they’re built for, even as those industries change. Generic LLM interfaces are built for mass-scale use cases and will likely not improve in the ways you need them to, even if they have in the past.

Support: buyers like managed offerings in many cases. When something goes wrong, there’s insurance, and there are customer success or support teams to talk to who have a deep understanding of your industry and problem. Generic LLM interfaces are broad and horizontal, and therefore not able to offer this.

Long term I think we’ll see AI become “just software” — the way early SaaS tools were mostly wrappers around databases. Yes, you can build anything with Excel or Airtable and Zapier, but people don’t, because they value time, support, and focus.

Read more about Jack and CoLoop

Jack Bowen

2. Quantitative Analysis of Survey Data

The following thoughts are from Guillaume Aimetti of Inspirient

LLMs are the right tools for generating summaries from results, but NOT for analysis – for a number of key reasons.

LLMs are great for conversations — not calculations!

They’re built for language, not math, and will “hallucinate” results. Leading LLMs nowadays have a 1 in 2 chance of getting a MATH problem wrong [1]. Also, don’t use LLMs to write code to do math, because no one will ever validate the generated code for you [2]!

Quantitative analysis needs precision, transparency, and repeatability:

LLMs can generate outputs that “sound right” but are statistically wrong, which means you can easily be fooled into a sense of accuracy but still need to calculate every number manually to verify correctness.

LLMs deliberately introduce randomness to make the responses more “human”, i.e. two runs on the same prompt can give different results.

And because their reasoning is opaque, there’s no way to trace how they reached a conclusion — which makes it risky to base business decisions on their output.
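One common source of the run-to-run variation mentioned above is temperature-based sampling of the next token. The toy sketch below assumes that mechanism; the `logits` values are invented, and real systems have other sources of variation that this does not model.

```python
import math
import random

def sample_token(logits: dict, temperature: float, rng: random.Random) -> str:
    """Pick one token from a {token: logit} mapping.

    A temperature of 0 is treated as greedy decoding (always the top token);
    higher temperatures flatten the distribution and add variability.
    """
    if temperature == 0:
        return max(logits, key=logits.get)
    scaled = {tok: value / temperature for tok, value in logits.items()}
    peak = max(scaled.values())
    weights = [math.exp(v - peak) for v in scaled.values()]  # stable softmax numerators
    return rng.choices(list(scaled), weights=weights, k=1)[0]

# Hypothetical scores a model might assign to the next token.
logits = {"42%": 2.0, "41%": 1.6, "45%": 1.2}

rng = random.Random()
greedy = {sample_token(logits, 0, rng) for _ in range(20)}
sampled = {sample_token(logits, 1.0, rng) for _ in range(20)}
print(greedy)   # always a single token in this toy sketch
print(sampled)  # usually more than one distinct token
```

In this toy setting, a reported percentage could differ between runs simply because a different token was drawn, which is the repeatability problem for quantitative work.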

Specialised tools are the right tools for relevant analysis but they fall short in some important aspects.

Flexibility and adaptability

Specialised tools are often hard-coded for specific types of data or questions — stray outside their comfort zone and they can’t cope.

Interpretation and storytelling

Even the best analysis is useless if no one understands it. Specialised tools often lack the natural-language capabilities to communicate results clearly or answer follow-up questions.

The optimal solution is not one or the other but rather a combination of a specialised tool for analysis and visualisation with LLMs for text generation and storytelling. This is how we built Inspirient.

[1] Gemini: A Family of Highly Capable Multimodal Models. Google (via arXiv, Jun 2024)

[2] An Empirical Study of the Characteristics of ChatGPT Answers to Stack Overflow Questions. Purdue (via arXiv, Aug 2023)

Read more about Guillaume and Inspirient

Guillaume Aimetti

Inspirient

The following thoughts are from Ray Poynter of ResearchWiseAI

One general rule that seems to be emerging from using LLMs is that you should only use them for tasks you could have done yourself. They can speed you up, but you have to check they have done it correctly. This is why people talk about guard rails, things that stop you from making mistakes.

In the case of analysing survey data, purpose-built AI tools for research, such as our own ResearchWiseAI, do not require the user to be so knowledgeable because they are less flexible, which is a way of saying they have strong guard rails. A Python-enabled LLM, such as ChatGPT, will allow you to conduct a much wider range of analyses than a specialised tool, but it will require that you are knowledgeable and alert. TBH, I often use an LLM to do advanced analysis, but I can also write code to do the analysis, and I am trading flexibility and speed against the need to be vigilant.

Read more about Ray and ResearchWiseAI

Ray Poynter

ResearchWiseAI

3. Coding Open-Ended Survey Responses

The following thoughts are from Michiah Prull and Tovah Paglaro of Fathom

LLMs are good at generating summaries. But summaries don’t incorporate your strategy or give you the control to be able to nuance and refine, they don’t have the rigour of accurate binary coding, and they don’t set you up to be able to analyse the coded data with confidence. Sometimes that’s fine. But when you want to leverage open-ends to find drivers of behaviour, analyse trends over time, or compare the detailed “why” for key segments – you need a purpose-built tool that delivers for that.

If you just need a qualitative gist, generic LLMs can be great — but for precision, detail, and control, you need a specialised tool, with specialised engineering, workflow, and interface design to harness AI for the task.

AI data analysis is like design: if you just need a quick image, ChatGPT can do the trick—but if you’re a professional who needs precision and control, you need specialised tools like Photoshop or Fathom.

One of the biggest challenges with generic LLMs is customisation—it’s like trying to fix an AI-generated image: the first draft is sometimes close, but each prompt to improve it only makes things worse till you give up from sheer exhaustion.

Read more about Michiah, Tovah and Fathom

Michiah Prull

Tovah Paglaro

The following thoughts are from Maurice Gonzenbach of Caplena

LLMs are an easy choice to get started quickly and probably a good choice when looking at small sample sizes (up to 150 respondents) where you just want a quick “back-of-the-envelope” result.

For larger studies or tracking studies where you want reliable quantification, they’re still an option, but then you need a much more elaborate process than just pasting the rows into ChatGPT and asking for coding.

Specifically, you need to tackle several key challenges.

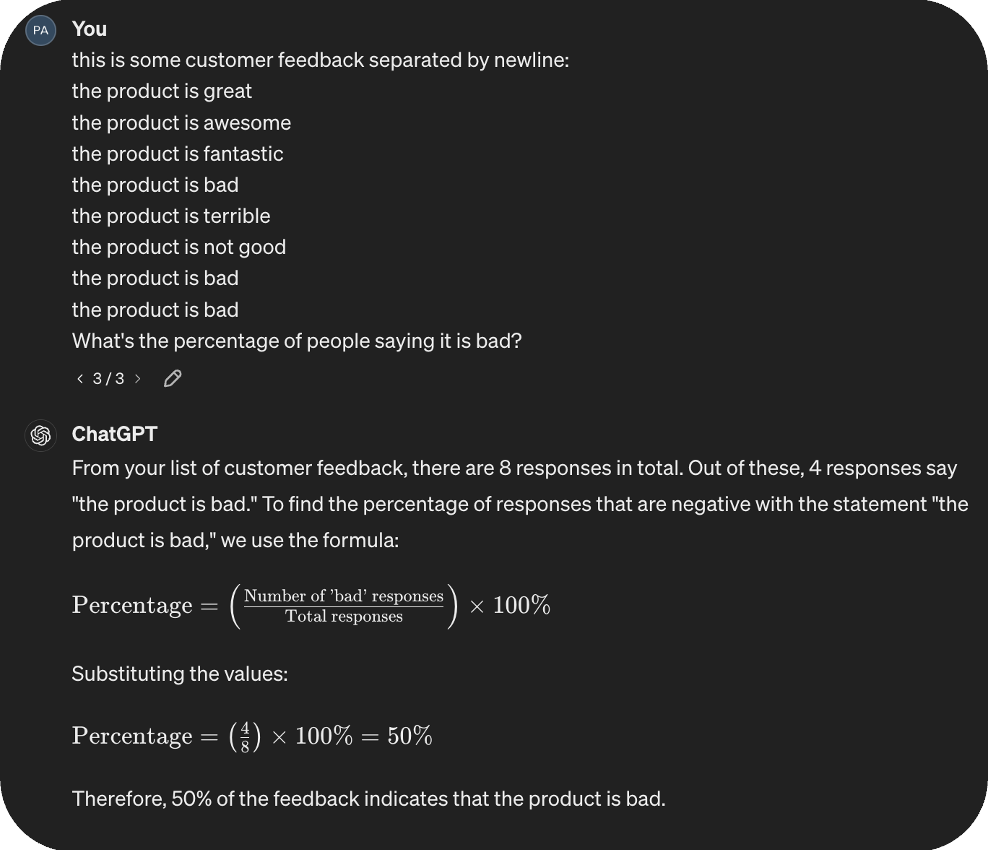

Data Analysis

LLMs are inherently bad at quantification (see screenshot), especially with larger numbers of rows. They might get it right with only a few (dozen) rows, but at the very latest, when you feed in more than 100 rows at a time, things start to get very flaky. Therefore, you have to create a process of feeding data in small batches. And as soon as you get past a few batches, you’ll probably need to use the API for that.
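A batching process of the kind described here could be sketched as below. The batch size of 50, the `build_prompt` format and the sample data are assumptions made for illustration; the actual API call is deliberately left out.

```python
from typing import Iterator, List

def batched(rows: List[str], batch_size: int) -> Iterator[List[str]]:
    """Yield consecutive slices of rows, each with at most batch_size items."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    for start in range(0, len(rows), batch_size):
        yield rows[start:start + batch_size]

def build_prompt(codebook: List[str], batch: List[str]) -> str:
    """Combine the codebook and one batch of verbatims into a single prompt."""
    codes = "\n".join(f"- {code}" for code in codebook)
    answers = "\n".join(f"{i}. {text}" for i, text in enumerate(batch, start=1))
    return f"Assign one code to each response.\n\nCodes:\n{codes}\n\nResponses:\n{answers}"

# Hypothetical data: 230 open-ended responses and a three-code codebook.
rows = [f"response {n}" for n in range(230)]
codebook = ["price", "service", "other"]

prompts = [build_prompt(codebook, batch) for batch in batched(rows, 50)]
print(len(prompts))
```

Each prompt repeats the full codebook, so the total amount of text sent grows with the number of batches as well as with the number of rows.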

Accuracy & Quality Assurance

While today’s LLMs have a lot of world knowledge out of the box and will get you to reasonable results very quickly, chances are still high that you want the coding to be done in a different way sometimes because of your industry / project / company knowledge.

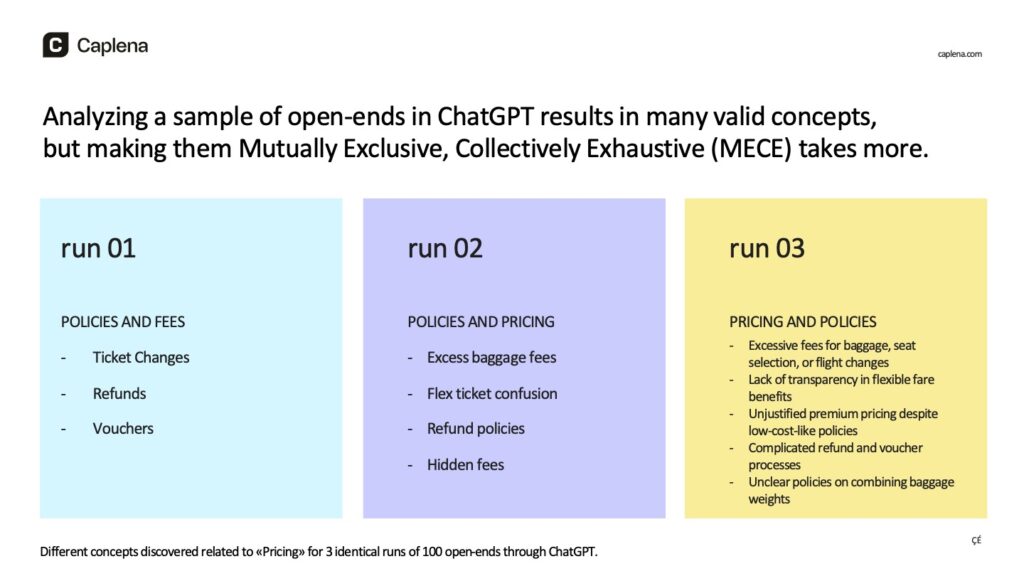

When interacting with LLMs directly, it is very difficult to gauge the quality of the coding due to the random nature of LLMs (every run will yield different results) and even harder to fine-tune these LLMs to your specific project.

Speed & Reliability

If you want to do coding on a professional level, you will need to feed in both the codebook and the OE to the LLM in every batch. This doesn’t scale well and will quickly add up to a lot of waiting time if you get towards 1000 or more rows (you’ll easily wait for 20 minutes for such a sample size). Errors and outliers will happen and will need to be taken care of.

And probably you will find things you want to change after the first run, so chances are high you’ll do this process multiple times. See visual below illustrating the different outputs you might get when using LLMs to do topic modelling – all are valid, but you need a process to handle / aggregate different outputs.

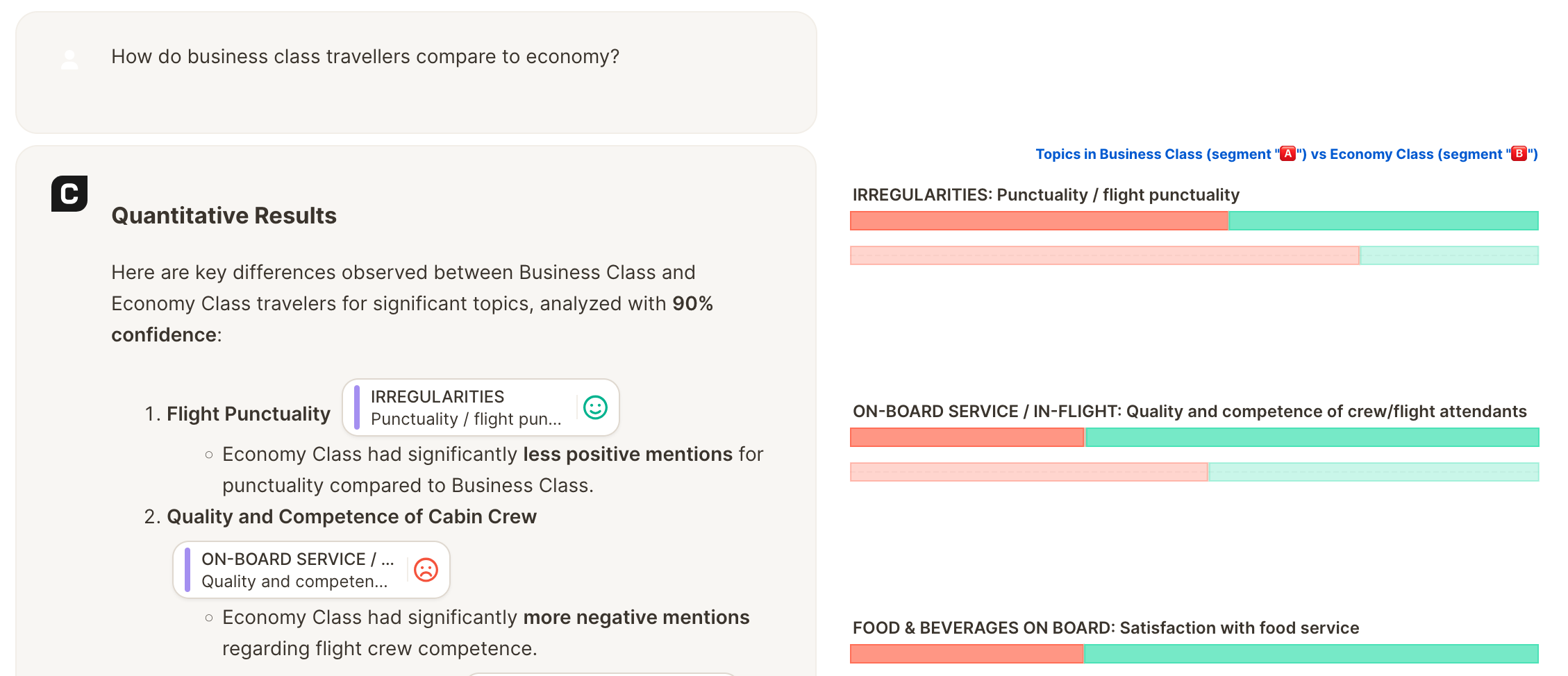
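For the narrower case where several runs code the same responses against the same codebook, one simple way to aggregate differing outputs is a majority vote, with disagreements flagged for human review. This is only one possible approach, not the method any contributor describes; the run data and the `min_agreement` threshold are invented.

```python
from collections import Counter
from typing import Dict, List, Optional

def majority_code(runs: List[Dict[str, str]], min_agreement: float = 0.5) -> Dict[str, Optional[str]]:
    """For each response ID, keep the code most runs agree on.

    Returns None for a response when no code is chosen by more than
    min_agreement of the runs, flagging it for human review.
    """
    result = {}
    for response_id in runs[0]:
        votes = Counter(run[response_id] for run in runs)
        code, count = votes.most_common(1)[0]
        result[response_id] = code if count / len(runs) > min_agreement else None
    return result

# Hypothetical outputs from three separate coding runs.
runs = [
    {"r1": "price", "r2": "service", "r3": "price"},
    {"r1": "price", "r2": "delivery", "r3": "price"},
    {"r1": "price", "r2": "other", "r3": "quality"},
]
print(majority_code(runs))
```

Reconciling runs that produce different codebooks altogether, as in topic modelling, is a harder problem that a vote like this does not address.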

Purpose-built AI tools for research and analytics should offer you integrated functionality to create a codebook (topic modelling) interactively, change and add topics on the go, do quality assurance, and fine-tune the model per project. They might actually use LLMs under the hood, at least for parts of the steps, but have a lot of processes, guard rails, and UI implemented around them.

In addition, they will often provide related functionality as well, like data analysis & visualisation capabilities, chatbots to interact with the results, configurable alerts, etc.

LLMs compare to specialised tools like engines to cars: engines can be used for a wide variety of tasks, and for some people, they are the product they need. Two categories where this applies are hobbyists who like to tinker with them directly for small / toy projects, or large manufacturing companies ready to dedicate significant resources of their specialised engineering workforce to embed them into larger solutions, as it is a core revenue pillar for them.

For most use-cases, a finished car, however, is the better solution – few people want to build the car around the engine if they’re not in the business of selling cars.

Read more about Maurice and Caplena

Maurice Gonzenbach

The following thoughts are from Tim Brandwood of codeit

Purpose-built AI tools for research insights and analytics have a number of important advantages over general purpose LLMs.

Ease of Use

In many aspects of life, it’s important to use the right tool for the job. You could use a hammer to get a screw in the wall, but a drill and a screwdriver would be much easier and do a better job.

A specialist tool is refined and tailored to the exact requirements of a task. The task of coding involves detailed checking, refining, and tweaking, which is difficult to do without a UX optimised for that kind of work.

Working with an LLM can be slow and tedious, with lots of back-and-forth reviewing results and rewriting prompts. This is an ad-hoc process that will vary every time and doesn’t scale well. Researchers have better things to do with their time than this kind of tinkering.

By contrast, a specialist tool gives you a standardised process that can be completed quickly and in a structured way.

Accuracy / Reliability

It’s very difficult to check the accuracy of LLM output without tools for quality control and human supervision. The LLM output is usually not reproducible, making it hard to trust and reuse.

Specialist software will give you the tools you need to produce reliable results and stay in control of quality. They will also use more sophisticated methods and not be solely reliant on LLM output. For example, codeit uses custom machine learning, which is deterministic, meaning it doesn’t hallucinate or give you different results every time.

Lack of Nuance

A generic LLM will often return generic answers and lack the nuance and subtleties needed on a given research project.

A specialist tool can allow a researcher to apply their own domain-specific logic when coding verbatims which will produce much more useful and tailored data for analysis.

Data Security

Generic LLMs may pose a risk of data leakage and can expose sensitive information outside of the organisation.

A specialist tool can provide tighter assurances around where the data is going and how it will be used.

Read more about Tim and codeit

Tim Brandwood

4. Generating Synthetic Personas

The following thoughts are from Nikola Kozuljevic of OpinioAI

Purpose-built AI tools for research will almost always be better than generic LLMs simply because they can be better controlled (less bias or none, plus control over prompting, prompt chaining, etc.) and more integrated into existing workflows, tools, and processes.

There’s no doubt that well-built, specialised, agent-enabled AI (that connects with data and workflows) is better than Generic LLMs. Two years ago, this would have been a tougher question to ask. At this point, where this tech is at now, it’s not even a discussion.

In simple terms, it comes down to control and flexibility.

Control

ChatGPT and Perplexity might include many models in their workflows and have different sets of instructions (extra safety) that cannot be found in other models. Because of that, they’re more biased in some ways—depending on how the developer built them. In Proprietary models, you have an open black box to play with, while in open-weight models, you can apply your own set of instructions for it to function.

Ultimately, if you want to get ‘deeper’ into the crevasses of LLMs, explore in depth their knowledge base, data and the cog – you’d require more control to do so. And that’s why generic LLMs can’t beat custom-built LLMs – as custom-built can be specifically built for the task at hand.

The same logic applies to ChatGPT vs. any API wrapper or fine-tuned model solution (like OpinioAI is)—you have more control over the prompt, prompt chaining, reasoning, and so on. There are some things you simply cannot do with ChatGPT that you can do with direct API access to proprietary models.

Flexibility

With agentic AI workflows (connecting various MCPs, the toolsets for the agents), you can extend AI to very different use cases. Importing a variety of data sets from multiple sources and sending functions and outputs, all automatically and programmatically, is something you simply cannot do in generic LLMs.

Read more about Nikola and OpinioAI

Nikola Kozuljevic

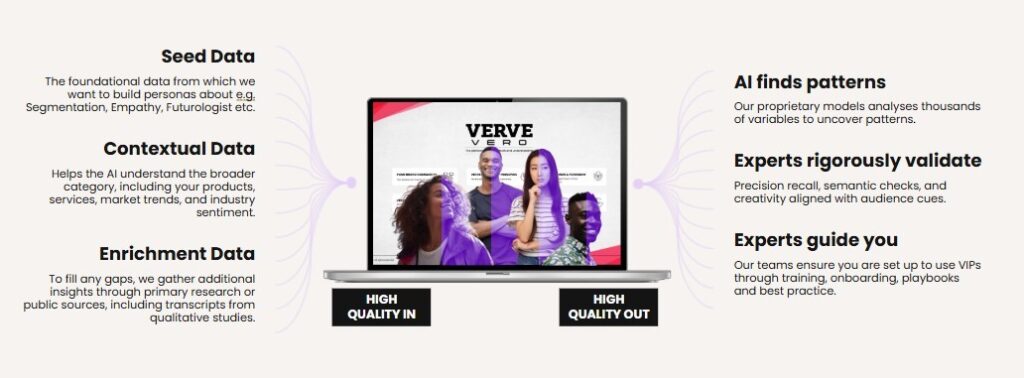

The following thoughts are from Rich Preedy of Verve

General-purpose LLM tools (like ChatGPT or Claude) can be useful for generating quick, surface-level personas. However, because they’re designed for general use, they lack the depth and nuance to accurately simulate real people.

These “synthetic personas” typically rely heavily on broad, general data contained within the LLM’s training set or data that has been scraped from the internet. While they may seem plausible when you first interact with them, it quickly becomes clear that they lack depth – often reflecting stereotypes or over-generalised assumptions. By contrast, specialist tools built for research and insights can produce simulated personas that are more robust, believable, and accurate.

Verve Intelligent Personas (VIPs) are designed around specific tasks – like audience understanding or concept testing – and built with trusted data sets, including proprietary primary research data and rich, curated contextual data, all processed using Verve Maven – our semantic analysis tool.

This produces simulations that are far more accurate than general-purpose LLM tools can create, with Verve Intelligent Personas (VIPs) achieving up to 90% accuracy vs. real audience responses. For research and insight, this means greater transparency, better alignment with evidence, and more robust and defensible outputs, particularly when personas are being used to inform strategy, product development, or creative work.

Read more about Rich and Verve

Rich Preedy

5. Creating Insights Knowledge Repositories

The following thoughts are from John Ferreira of Finch Brands

ChatGPT, Gemini, and Copilot don’t offer your organisation any competitive advantage. All your competitors have access to the same public data that you do through those tools. True competitive advantage emerges at the intersection of your proprietary data and public data.

Betting on a single generic LLM is like putting all of your savings in a single stock. There are times when it will be a leader and times when it will be a laggard. With knowledge management, a much smarter strategy is to take a portfolio approach. Purpose-built AI tools for research & insights like Finch Brands’ Charlie® are built with a layered, multi-LLM architecture that assigns each LLM to different tasks based on best-in-class functions.

Hallucinations are a real concern when it comes to LLMs, and orienting your organisation around a single LLM is a surefire way to maximise the incidence of hallucinations when you’re trying to make critical decisions. Purpose-built knowledge management systems are built on a multi-LLM architecture, where each LLM checks the work of other models, weighing and critiquing it before the results even hit your screen. The result is a dramatic reduction in the rate of hallucinations.
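To make the cross-checking idea concrete, here is a minimal sketch, assuming one model drafts an answer and several reviewers vote on it before it is shown. It is not how Charlie® or any specific product works: `cross_checked_answer`, the stub drafters and reviewers, and the evidence figure are all hypothetical, and in practice each stub would be a call to a different LLM.

```python
from typing import Callable, List, Optional

Drafter = Callable[[str], str]          # question -> draft answer
Reviewer = Callable[[str, str], bool]   # (question, draft) -> approve?

def cross_checked_answer(
    question: str,
    drafter: Drafter,
    reviewers: List[Reviewer],
    min_approvals: int,
) -> Optional[str]:
    """Return the draft only if enough reviewers approve it; otherwise withhold it."""
    draft = drafter(question)
    approvals = sum(1 for review in reviewers if review(question, draft))
    return draft if approvals >= min_approvals else None

# Illustrative, made-up evidence that a reviewer checks drafts against.
EVIDENCE = {"brand awareness": "62%"}

def careful_drafter(question: str) -> str:
    return "Brand awareness is 62%"

def careless_drafter(question: str) -> str:
    return "Brand awareness is 75%"  # not supported by the evidence

def evidence_reviewer(question: str, draft: str) -> bool:
    # Approve only if the draft quotes the figure held in the evidence store.
    return EVIDENCE["brand awareness"] in draft

def length_reviewer(question: str, draft: str) -> bool:
    # Trivial second check so that there is more than one vote.
    return len(draft) < 200

reviewers = [evidence_reviewer, length_reviewer]
question = "What is our brand awareness?"
supported = cross_checked_answer(question, careful_drafter, reviewers, min_approvals=2)
unsupported = cross_checked_answer(question, careless_drafter, reviewers, min_approvals=2)
print(supported, unsupported)
```

The unsupported draft never reaches the user because one reviewer rejects it. How much this reduces hallucinations in practice depends on how independent and how capable the reviewing models are.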

Enterprise LLMs like Copilot are great for individual productivity, but they are not designed for organisational customer centricity. Those are fundamentally different lanes with different objectives in your organisation. Purpose-built knowledge management solutions like Charlie® can help you chart the course for where you should place your bets, while focused LLMs like Copilot help you rally the team to get there faster.

Read more about John, Finch Brands & Charlie

John Ferreira

Charlie™ AI

The following thoughts are from Joseph Rini of Market Logic Software’s DeepSights AI

When it comes to AI-powered knowledge management for insights, generic LLM solutions like Microsoft Copilot and Google Gemini can have a role to play. Both are robust within their respective ecosystems (Microsoft Office 365 and Google Workspace), offering strong data integration capabilities.

However, they generally lack the necessary customisation for specific market insights applications and do not typically integrate with the sorts of external sources and partners that insights teams need to work with.

They are also not bound to provide responses based on your proprietary knowledge base. Rather, they may provide responses based on web content, emails, or other internal documents they come across in their respective environments.

Specialised AI tools for research and insights will typically have a number of important advantages.

Insights ecosystem integration: they connect to your entire insights data ecosystem and can pull in all your sources and easily integrate insights into any system you use – both in the insights department and in stakeholder teams.

Understanding market research: a major challenge in interpreting market research presentations is accurately identifying and handling different types of information, such as study background, sample descriptions, questionnaire statements, respondent quotes, research findings, and vendor pitches. Generic AI tools usually don’t recognise these nuances, so they can make significant errors – like mistaking questionnaire statements or individual quotes for representative data or misinterpreting sample compositions as actual consumer numbers.

Relevant source ingestion and combination: specialist AI tools have a deeper understanding of market research data through features like visual extraction – going beyond text by analysing visual data like graphs, charts, and infographics. By combining visual and text-based data, they are more likely to be reliable and accurate. Specialist AI tools also reference multiple sources by combining findings from primary, secondary, and news sources to provide a more cohesive response.

Awareness of contextual applicability: consumer, category, brand, and market knowledge is highly context-dependent and perishable, meaning insights valid in one market or time may not apply elsewhere or later. An AI ignoring these factors may offer irrelevant information. For example, imagine an AI tool applying pre-Covid Italian consumer attitudes to present-day England. Without your context-dependent knowledge, such misunderstandings are likely to happen.

Customer-specific context and best practices: it is crucial to customise the AI with customer-specific context so that it accurately reflects each business’s situation and best practices. These settings include company-specific jargon, background knowledge, and guidelines on data usage. Ideally, your Gen AI platform allows individual users or admins to configure such settings, enabling the AI to adapt to their specific context.

Intuitive user experience: does your Gen AI platform effortlessly integrate complex AI processes, allowing you to input questions or problems and receive optimal responses? Prioritise a design that eliminates the need for user training and includes robust interaction guardrails to prevent misuse from inadequate prompts.

AI watchouts: specialist AI tools exhibit higher levels of insights awareness by highlighting inconsistencies and limitations in sources – helping users quickly identify areas that may need closer attention.

Source selection: a specialised Gen AI market insights platform lets users customise answers by choosing which sources to include or exclude, for instance, narrowing the focus by removing sources or broadening it by adding more.

Control for insights teams: specialist AI tools have user admin control centres designed for insights teams – so they can manage access permissions, configure settings and set up new users intuitively – without needing to rely on IT or engineering teams.
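Two of the points above, contextual applicability and source selection, come down to filtering what the AI is allowed to draw on before it answers. The sketch below is a minimal illustration under stated assumptions, not the DeepSights implementation: the `Finding` record, its fields, `select_findings`, and the sample library are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import AbstractSet, Iterable, List

@dataclass(frozen=True)
class Finding:
    source: str       # e.g. "tracker", "news"
    market: str       # market the finding applies to
    published: date   # when the finding was published
    text: str

def select_findings(
    findings: Iterable[Finding],
    market: str,
    not_before: date,
    exclude_sources: AbstractSet[str] = frozenset(),
) -> List[Finding]:
    """Keep findings for the right market, recent enough, from permitted sources."""
    return [
        f for f in findings
        if f.market == market
        and f.published >= not_before
        and f.source not in exclude_sources
    ]

# Illustrative, made-up library of findings.
LIBRARY = [
    Finding("tracker", "Italy", date(2019, 6, 1), "Pre-Covid attitudes in Italy"),
    Finding("tracker", "England", date(2024, 3, 1), "Current attitudes in England"),
    Finding("news", "England", date(2024, 5, 1), "Press coverage in England"),
]

selected = select_findings(
    LIBRARY,
    market="England",
    not_before=date(2023, 1, 1),
    exclude_sources={"news"},
)
print([f.text for f in selected])
```

Here the pre-Covid Italian finding is dropped on both market and date, and the user has chosen to exclude news sources, so only the recent English tracker finding is passed on to the model.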

Read more about Joseph, Market Logic & DeepSights

Joseph Rini

DeepSights™ Assistant

Final Thoughts on AI Tools for Research

General-purpose LLMs have their place in day-to-day tasks, but if you want real value for insights use cases, purpose-built AI tools for research are the way to go.

Security, accuracy, consistency, flexibility, nuance, precision, control, and reliability are just some of the clear advantages.

So next time your CMO, your client or your finance director asks, “Why can’t we just use ChatGPT for that – and save a load of money?” you have all the answers you need right here.